I have to figure out how to store both the initial state of everything, for saving and editing, and a game state for moving everything around. I thought about just keeping them separate, but I do want to be able to trigger doors and things in editor mode, largely because that's how dynamic lights are actually going to work. To make sure lighting works realistically with doors, my engine will bake all of the triggers at their different states, and the deltas between those bakes get turned into a sequence of triggers that alter the light in a few sectors. This means it's way more dynamic than any real-time light could ever be, but it requires that all the doors actually work in the editor. Having everything work in the editor is also kind of core to the idea of what I'm doing.
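To make the delta idea concrete, here is a rough sketch of how two bakes could be compared and replayed. It is only an illustration, not the engine's actual code: the `LightDelta` struct, the `diffBakes` and `applyDeltas` functions, and the assumption of one integer light level per sector are all made up for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical record: how much one sector's light changes when a trigger fires.
struct LightDelta {
    std::size_t sector;
    int change;
};

// Compare two baked results (door closed vs. door open) and keep only
// the sectors whose light level actually differs between them.
std::vector<LightDelta> diffBakes(const std::vector<int>& closedBake,
                                  const std::vector<int>& openBake) {
    assert(closedBake.size() == openBake.size());
    std::vector<LightDelta> deltas;
    for (std::size_t i = 0; i < closedBake.size(); ++i) {
        if (openBake[i] != closedBake[i]) {
            deltas.push_back({i, openBake[i] - closedBake[i]});
        }
    }
    return deltas;
}

// Apply the deltas to the live game state.
// sign = +1 when the door opens, -1 when it closes again.
void applyDeltas(std::vector<int>& light,
                 const std::vector<LightDelta>& deltas, int sign) {
    for (const LightDelta& d : deltas) {
        light[d.sector] += sign * d.change;
    }
}
```

In this sketch the closed-door bake doubles as the saved initial state, and the game state is just a copy of it that the deltas modify, so the editor can fire the same trigger without touching what gets saved.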

In the meantime, I made a horrible discovery. I went to profile the engine again, and it crashed as soon as it loaded. Something in there depends on being in the dev environment, so I'm going to slow down and take a good look at all of the pointers I have so far to make sure everything is nice and tidy before continuing. If I let things leak, it'll only get worse over time. I have a file called CompileOptions.h, which has tons of commented-out #define statements, and I'm adding tons more for debugging specific things. I operate under the idea that it's better to leave debugging code in the source repository and remove it at compile time with the preprocessor.
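For anyone who hasn't used this pattern, a minimal sketch looks something like the following. The flag name `DEBUG_TRACK_SECTORS`, the `Sector` struct, and the helper functions are invented for the example and are not taken from my actual CompileOptions.h.

```cpp
#include <cassert>

// In a real project this line would live in CompileOptions.h.
// Comment it out and all of the tracking code below compiles away.
#define DEBUG_TRACK_SECTORS

struct Sector { int id; };

#ifdef DEBUG_TRACK_SECTORS
static int g_liveSectors = 0;
#define TRACK_ALLOC() do { ++g_liveSectors; } while (0)
#define TRACK_FREE()  do { assert(g_liveSectors > 0); --g_liveSectors; } while (0)
#else
#define TRACK_ALLOC() do { } while (0)
#define TRACK_FREE()  do { } while (0)
#endif

Sector* createSector(int id) {
    TRACK_ALLOC();
    return new Sector{id};
}

void destroySector(Sector* s) {
    if (s != nullptr) {
        TRACK_FREE();
        delete s;
    }
}

// Returns the number of sectors still allocated, or -1 if tracking is compiled out.
int liveSectorCount() {
#ifdef DEBUG_TRACK_SECTORS
    return g_liveSectors;
#else
    return -1;
#endif
}
```

If `liveSectorCount()` is not zero at shutdown, something leaked. With the `#define` commented out, the macros expand to nothing, so the release build pays no cost for the check.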

There is a bright side today too, because I fixed what I thought would be a very involved problem. Up until now, I was confused about why my pixels always seemed to be too big. Sometimes two columns would display almost identical things. At first I thought nothing of this: the textures themselves are only 128x128, so of course the pixels look huge. But I do two upscale mipmap passes, so in memory I have a smoothed-out 512 image, and I had the resolution set too low for it to be showing that as anything other than one pixel each. I thought it was grabbing the wrong mipmap, but I have a special render mode that spits out a color code of which mipmap is actually being used, and everything looked right. It wasn't until I was pulling my hair out trying to figure out why my refraction calculations were off that I realized I had precalculated only 628 cosine values. A 90 degree FOV covers a quarter of that table, which is only 157 different angles, so even at a super low resolution the rays were simply running over each other. I made it 20x as precise and the problem completely went away. That also makes 3140 the max X resolution. There is no way it'll ever run that wide in real time, but it's enough to render full quad HD.

My engine is capable of hitting 60FPS, but almost every image I've posted has been at a higher resolution than can run at that rate. In editor mode, the frame rate is capped most of the time, and you have to disable several failsafes to actually see it go full speed. This is because I mostly work on battery power. That's a conscious choice, both to limit my own hours and to force everything to be efficient. There is no reason to render at 60FPS in the editor if nothing is changing.
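Going back to the cosine table, the arithmetic is easy to check with a small sketch. This is not the engine's real table code: `CosTable`, `entriesInFov`, and `columnsGetUniqueAngles` are hypothetical names, and it assumes the table covers a full circle in equal steps and that each screen column wants its own table entry.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical lookup table: one cosine value per equal step around a full circle.
struct CosTable {
    std::vector<double> values;

    explicit CosTable(std::size_t steps) : values(steps) {
        assert(steps > 0);
        const double twoPi = 2.0 * std::acos(-1.0);
        for (std::size_t i = 0; i < steps; ++i) {
            values[i] = std::cos(twoPi * static_cast<double>(i) /
                                 static_cast<double>(steps));
        }
    }

    // Number of distinct table entries that fall inside a field of view (in degrees).
    std::size_t entriesInFov(double fovDegrees) const {
        return static_cast<std::size_t>(
            static_cast<double>(values.size()) * fovDegrees / 360.0);
    }
};

// True if every screen column can get its own angle from the table.
// If this is false, neighbouring columns end up sharing a ray direction.
bool columnsGetUniqueAngles(const CosTable& table, double fovDegrees,
                            std::size_t screenWidth) {
    return screenWidth <= table.entriesInFov(fovDegrees);
}
```

With 628 steps, `entriesInFov(90.0)` comes out to 157, so anything wider than 157 columns has to reuse angles. With 12560 steps (20x), the same call gives 3140, which is where the max X resolution comes from.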

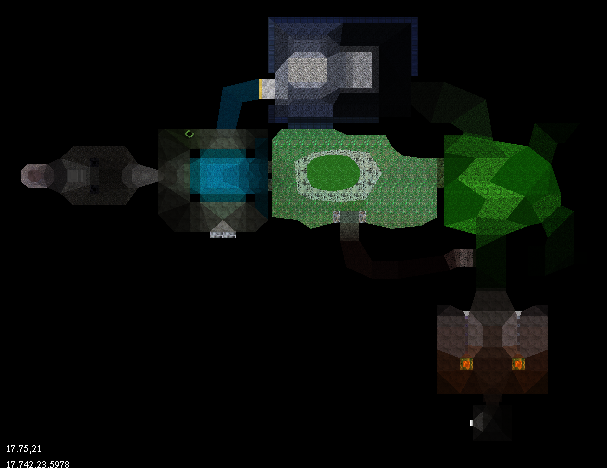

Here you can see how the light spreads from above in the map viewer.