Hello Forum,

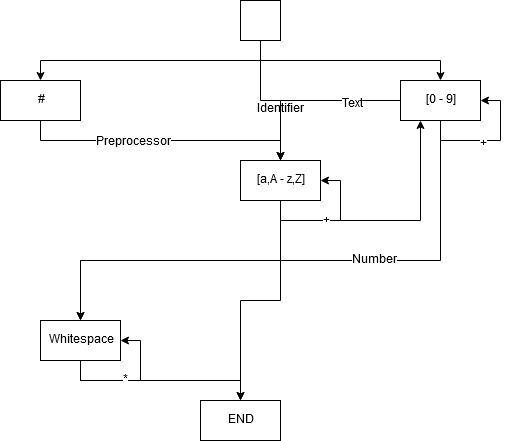

I have reached a point in the rework of our build tool where I have to improve the parsing of different languages like C# and C++ to detect dependencies between files and projects. Maybe I will also add some kind of linting to the build tool. Unfortunately, I'm tied to an older version of C# to stay backwards compatible with older Visual Studio versions. For that reason I can't use the Roslyn parser framework out of the box, and I had to write my own "analyzer" to solve the task.

Now that I'm ready to refactor, I want to turn it into a real parser instead of a Regex-driven analyzer. For this task, I also had a look at parser utility libraries like Sprache and Pidgin to get some more convenience in declaring tokens and rules. I have written parsers by hand many times before, and they were always optimized for the specific token/rule and use case. For example, instead of just reading the next string, comparing it to a keyword, and then conditionally resetting the stream or passing on the token, I wrote something like this:

```csharp
// Scratch buffer, sized to the longest keyword.
char[] buffer = new char[MaxKeywordLength];

bool MyKeywordRule(Stream data, TokenStream result)
{
    // Early out: the first character already rules this keyword out.
    if (data.Peek() != myKeywordFirstChar)
        return false;

    long cursor = data.Position;

    // Read at most as many characters as the keyword has.
    int count = data.Read(buffer, 0, (int)Math.Min(myKeywordLength, data.Length - cursor));
    if (count == myKeywordLength && buffer.Compare("keyword", myKeywordLength))
    {
        result.Add(myToken);
        return true;
    }

    // No match: rewind so the next rule sees the same input.
    data.Position = cursor;
    return false;
}
```

My hand-written function is optimized for performance: it first tests the first character, then fills a buffer with exactly as many characters as the keyword has, and finally compares the buffer with the keyword. If I write this in Sprache or Pidgin, it will just read from the stream, compare the result with the keyword and reset on failure; there is no early out or any other speed optimization. If I want to test against a range of keywords or character sequences, such as operators, a generic solution will read each alternative from the stream and test it in turn, while my hand-written solution can fill the buffer once, test the result in a switch statement and exit early based on the number of characters read.
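To make the operator case concrete, here is a minimal sketch of what I mean by "fill the buffer once and switch". The class name `OperatorScanner`, the method `Match` and the small operator set are only assumptions for illustration, and it works on bytes because `System.IO.Stream.Read` takes a `byte[]`. It also assumes a seekable stream that returns all requested bytes in one `Read` call when they are available (true for `MemoryStream`, not guaranteed for every stream).

```csharp
using System;
using System.IO;

static class OperatorScanner
{
    // Longest operator handled below ("<<=" and ">>=").
    public const int MaxLength = 3;

    // Hypothetical helper: returns the length of the longest operator found at the
    // current stream position (0 if none) and leaves the stream right after it.
    // Handled set (illustrative only): < <= << <<= > >= >> >>= = == ! !=
    public static int Match(Stream data, byte[] buffer)
    {
        long cursor = data.Position;

        // Single read for all alternatives.
        int count = data.Read(buffer, 0, MaxLength);
        int length = 0;

        if (count > 0)
        {
            switch ((char)buffer[0])
            {
                case '<':
                case '>':
                    length = 1;
                    if (count >= 2 && buffer[1] == '=')
                        length = 2;                                         // <= or >=
                    else if (count >= 2 && buffer[1] == buffer[0])
                        length = (count >= 3 && buffer[2] == '=') ? 3 : 2;  // <<= >>= or << >>
                    break;

                case '=':
                case '!':
                    length = (count >= 2 && buffer[1] == '=') ? 2 : 1;      // == != or = !
                    break;

                // default: not an operator, length stays 0
            }
        }

        // Consume only the matched part; on failure this rewinds to the start.
        data.Position = cursor + length;
        return length;
    }
}
```

Calling `OperatorScanner.Match(stream, new byte[OperatorScanner.MaxLength])` on a stream positioned at `<<=x` should consume three bytes, while a stream positioned at a letter is left untouched.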

My question is whether a solution exists that can do both: define rules in a convenient way like Sprache or Pidgin, and still compete to some degree with a hand-written solution (it need not be as fast, but it should be faster than simply replaying the parts of a rule one after another). I thought about a solution using C# Expressions, where an operation such as my keyword rule can be written as a sequence of pre-condition (testing the first character), bootstrapping (reading a buffer of a certain size) and validation (testing whether the buffer matches the keyword). These parts could then be merged, so that a rule "A or B" doesn't test for A first, then reset the stream and test for B second, but instead merges the pre-conditions into

```
IF not('A') and not('B')
    FAIL
```

fills the buffer only once and then tests for equality in a switch-case statement. Or is the best solution to keep writing such code by hand for each individual rule?
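As a rough sketch of the Expressions idea, the following builds one compiled delegate for a whole set of alternative keywords, merging their first-character pre-conditions into a single switch. Everything here is an assumption for illustration: the names `KeywordMatcher` and `Compile` are made up, it works on a `string` plus position instead of a stream to stay short, it requires `Expression.Switch` (available from .NET 4.0), and it does not check word boundaries.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;
using System.Reflection;

static class KeywordMatcher
{
    // Hypothetical helper: returns a delegate (input, pos) => index of the keyword
    // found at input[pos], or -1 if none matches. Assumes a non-empty array of
    // non-empty keywords.
    public static Func<string, int, int> Compile(string[] keywords)
    {
        ParameterExpression input = Expression.Parameter(typeof(string), "input");
        ParameterExpression pos = Expression.Parameter(typeof(int), "pos");
        MethodInfo charAt = typeof(string).GetMethod("get_Chars", new[] { typeof(int) });
        MethodInfo compare = typeof(string).GetMethod("CompareOrdinal",
            new[] { typeof(string), typeof(int), typeof(string), typeof(int), typeof(int) });
        Expression fail = Expression.Constant(-1);

        // One switch case per distinct first character (the merged pre-condition).
        var cases = new List<SwitchCase>();
        foreach (var group in Enumerable.Range(0, keywords.Length).GroupBy(i => keywords[i][0]))
        {
            // Validation: a chain of conditionals. Iterating shortest first makes the
            // longest keyword the outermost (first evaluated) test.
            Expression body = fail;
            foreach (int i in group.OrderBy(i => keywords[i].Length))
            {
                Expression matches = Expression.Equal(
                    Expression.Call(compare, input, pos, Expression.Constant(keywords[i]),
                        Expression.Constant(0), Expression.Constant(keywords[i].Length)),
                    Expression.Constant(0));
                body = Expression.Condition(matches, Expression.Constant(i), body);
            }
            cases.Add(Expression.SwitchCase(body, Expression.Constant((int)group.Key)));
        }

        // switch ((int)input[pos]) { case 'c': ...; case 'i': ...; default: -1 }
        Expression dispatch = Expression.Switch(
            Expression.Convert(Expression.Call(input, charAt, pos), typeof(int)),
            fail, cases.ToArray());

        // Guard against reading past the end of the input.
        Expression guarded = Expression.Condition(
            Expression.LessThan(pos, Expression.Property(input, "Length")), dispatch, fail);

        return Expression.Lambda<Func<string, int, int>>(guarded, input, pos).Compile();
    }
}
```

With `var match = KeywordMatcher.Compile(new[] { "class", "char", "int", "internal" });`, a call like `match("internal x", 0)` goes through one first-character switch and then tries `internal` before `int`. Whether this gets close enough to a hand-written rule would have to be measured.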

Thank you in advance for your suggestions, experts!