Here is an interesting thing I noticed in my dog's behavior. When he is blocking the way and I want to pass, he moves aside as soon as I get close and it's obvious to him that I want to get to the other side. What this means is that the dog runs a predictive routine in his head: he can figure out where I'm headed, that he is standing in the way, and which direction he needs to move to get out of the way.

Comments

avoidant personality disorder

I can't tell if you're joking or if that's an actual disorder that dogs can have. I'm sure what I'm describing is a behavior common to all dogs, not just mine.

The hard part is to sense the environment for other obstacles, to include them into the motion planning process. The easy and brute force way would be to render small framebuffers with depth

You can use the RTS mindset. Even if you only have a first-person view, you can still 'imagine' a world seen from above. When you see objects from the first-person view, you can imagine how they fit into the RTS map. When you can't see all sides of an object, you can approximate. If you see a car on the street from a particular angle, you know it doesn't extend forever on the side you don't see; you know the approximate shape of a car, so you use that on your imaginary map. The same goes for a house or any other object. To navigate, you just split the map into sectors and mark the sectors with objects on them as unreachable.
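As a rough illustration of the sector idea, here is a minimal Python sketch. It assumes every object is approximated by an axis-aligned rectangle of sectors (a "footprint"), and it uses a plain breadth-first search to walk around the blocked sectors. The names `build_sector_map` and `find_path` are made up for this example.

```python
from collections import deque

def build_sector_map(width, height, footprints):
    """Return a grid of booleans; True means the sector is blocked.

    footprints: list of (x0, y0, x1, y1) inclusive sector rectangles,
    a hypothetical approximation of each object's shape seen from above.
    """
    blocked = [[False] * width for _ in range(height)]
    for x0, y0, x1, y1 in footprints:
        for y in range(max(0, y0), min(height - 1, y1) + 1):
            for x in range(max(0, x0), min(width - 1, x1) + 1):
                blocked[y][x] = True
    return blocked

def find_path(blocked, start, goal):
    """Breadth-first search over free sectors (4-connected).

    Returns a list of (x, y) sectors from start to goal, or None.
    """
    height, width = len(blocked), len(blocked[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        x, y = cell
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nx < width and 0 <= ny < height
            if inside and not blocked[ny][nx] and (nx, ny) not in came_from:
                came_from[(nx, ny)] = cell
                queue.append((nx, ny))
    return None
```

For example, `find_path(build_sector_map(5, 5, [(2, 0, 2, 3)]), (0, 0), (4, 0))` has to route around the blocked column through the one free sector at the bottom.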

Maybe it's not a dog. Some foxes actually look like dogs. I've seen foxes act like what you describe.

Ironically it's my dog which looks like a fox. And i'm sure it's my dog which has the mental issues too. From my observation, almost all dogs have one or another serious disorder issue.

Know-it-all dog experts always say that's the owner's fault for bad education. But i think domestication only gets us so far.

Even if you have a first person view only perspective you can still 'imagine' a world seen from above.

Think we are in a skyscraper with multiple floors, but those floors interleave in height and are not nicely separated. Also, there was an earthquake, so the floors now are bent, broken, even intersecting.

The NPC takes cover behind a wall, and this wall has a small hole. Large enough to shoot through it while keeping covered otherwise.

In such a situation, a top down approach would not work at all. We want some general 3D representation of the environment. We want a way to find the hole.

Maybe the framebuffer isn't that bad. At least it's 2D as well, but can capture all the relevant things.

For a Terminator robot, it would just work. Because each robot has its own computer. But in a game we have many NPCs and just one computer.

Though, UE5 Nanite for example is efficient at rendering many views. The idea might soon become an option for AI. We can timeslice and reproject to optimize. NPC views need no fancy lighting either.

But for current standards you're right and top down representation is widely used.

I remember a related example of a fluid monster in Gears 5. The fluid simulation was top down 2D, but it could even cross multiple floors of the building, which they achieved with some layering tricks.

So if you are willing to deal with the design restrictions, the approach can get quite far and stay efficient.

Think we are in a skyscraper with multiple floors, but those floors interleave in height and are not nicely separated. Also, there was an earthquake, so the floors now are bent, broken, even intersecting.

That's extreme, but IMO even in this scenario partitioning space into a grid is the correct approach. A multilevel building is a set of overlapping RTS maps that communicate with each other. If an object defies the grid (say it runs diagonally across the map) and you need to work with that particular object, you just use a separate matrix/reference grid that aligns well with the object (so the object falls into a column of sectors). My belief is that we and animals think the way a computer would think. We function by organizing space in our heads. Splitting the space into a grid is the only way to make sense of it.

If an object defies the grid (say it runs diagonally across the map) and you need to work with that particular object, you just use a separate matrix/grid/point of reference that aligns well with the object (so the object falls into a column of sectors). My belief is that we and animals think the way a computer would think. We function by organizing space in our heads. Splitting the space into a grid is the only way to make sense of it.

Agree on the mental model of space in mind, but unfortunately making a discretization of such a model is very hard. The grid approach you propose is the typical programmer's first thought, but it's either naive or inefficient, depending on grid resolution.

Taking your example of an angled object, requiring a second grid aligned to its orientation, has multiple issues:

* How do you handle the overlap of multiple grids? How does a path know it has to switch to another grid from a certain point? Links between grids and cells? How to generate those links robustly?

* What's the proper grid orientation for an object with a triangle shaped base? No matter how we rotate it, one edge is always jaggy. We can minimize the jaggedness and distribute the error to all edges, but then all edges are still jaggy.

That's a rabbit hole of problems, complexity, and never ending issues.

At this point it's much better to reject ‘efficient’ grids, and use an irregular representation instead. Like a BSP tree for example.

Or you use grids at very high resolution, so jaggedness and inaccuracy are no issue.

The current standard solution actually is a navmesh, in case you don't know: https://github.com/recastnavigation/recastnavigation

That's pretty fine for path finding, but does not handle sensing. And we need sensing for next gen AI. Path finding gets you from the kitchen to the bath, but it does not help much with getting natural combat.

We could extend the idea by covering the whole level with a connected mesh. I even have the tool to generate this, so i could experiment.

On my hole example, i could trace a ray from NPC to player. It hits a wall. Then i start paths from the hitpoint on the mesh and i would find the hole.

But this would mean much more path finding than we do now, and it's expensive. Plus, it's still a bad abstraction, not similar to our (or dogs') mental models. And a full surface navmesh still does not work well for flying enemies.

Probably i have to befriend the framebuffer idea…

On my hole example, i could trace a ray from NPC to player. It hits a wall. Then i start paths from the hitpoint on the mesh and i would find the hole.

That's not how things work in real life. In real life one has vision and is able to identify the hole because the hole looks different from the rest of the wall. Once the hole is detected, the person can estimate its distance from the hand/weapon etc. and move the weapon into the appropriate position. In a simulated environment you'd have to mark the hole with a gizmo/helper object in the editor. At run time the NPC would just read the gizmo's position/data. There is not much to sort out in a scenario like this. Things get challenging when you have multiple choices, i.e. the wall has three holes or more.

That's not how things work in real life. In real life one has vision and is able to identify the hole

Yeah, that's why i think the NPC framebuffer becomes unavoidable. Navmesh for the coarse tactics and navigation, visual sense for actual behavior.

About gizmos, that's manual design work which has ups and downs, but it's restricted to static stuff.

It would be cool if some grenade generates the hole, an NPC sees the opportunity, and may even need to crouch down or stand on a desk to reach the hole so he can shoot at you.

The fun would not come from the ability to shoot through holes, but from observing the NPC's smart behavior of reaching and exploiting the hole.

This also applies (probably mainly) to the player avatar in a third person game. We may have smart robotics systems to plan and execute such actions, but we will lack the interface for the player to control them. M+kb or joypad won't suffice.

Thus, smart characters are the only option, and watching automatic behavior must be predictable, fun and entertaining, not a frustrating lack of control.

All my game design ideas circle about this stuff. My vision is still vague, but i clearly see big opportunity of a new action genre. Promising enough i'm willing to sacrifice beloved first person if it works out… : )

Things get challenging when you have multiple choices, i.e. the wall has three holes or more.

Yeah, many things seem just too difficult. But we don't need perfect intelligence, just something allowing to introduce new mechanics.

"It would be cool if some grenade generates the hole, an NPC sees the opportunity, and may even need to crouch down or stand on a desk to reach the hole so he can shoot at you."

What I can think of is this: once the explosion takes place, the places where fragments create holes (something that could be set through a random generation routine) could be automatically labeled as holes. When a character is looking for 'holes', the data would be there, ready to be served, with no searching involved. This way the holes become just game objects you can interact with.

What I can think of is this: once the explosion takes place, the places where fragments create holes (something that could be set through a random generation routine) could be automatically labeled as holes. When a character is looking for 'holes', the data would be there, ready to be served, with no searching involved. This way the holes become just game objects you can interact with.

That's possible, but besides the difficulty of labeling things (what makes a hole a hole?), you also narrow yourself into a predefined set of potential options and actions.

For the former, it's incredibly difficult to make computers associate things. Especially if it's about 3D geometry. The explosion will create all kinds of unpredictable fractures, but only a few changes will affect potential tactics.

So we could do things like searching for holes which opened between two different rooms. Though, we have been trying this for decades, in the application of portal based hidden surface removal to optimize rendering. Solutions are based on random sampling with raytracing, rasterizing many views, or making voxelizations. That's not realtime, and probably no option. Also, we need a definition of ‘rooms’ to begin with. And every time we force ourselves into a need to classify / label stuff, we end up restricted and limited, and add a new source of erroneous data causing bugs.

The latter is even more important: We must give the player true options.

What are your options in current games? You can choose your hairstyle, which does not affect anything.

You can choose to invest skill points either in strength or magic. Which is implemented in some abstract pen and paper mini game within the main video game. The interaction between those two games goes both ways, but the games remain clearly separated. They do not fuse. RPG elements happen on GUI screens, action and simulation happens in the 3D world. That's not how we ‘merge genres’ successfully. The RPG crap is just bolted-on nonsense to pretend there are options, to show off all the things we actually fail to deliver. It's like proudly admitting how incompetent we are.

It's the same as with cutscenes: We interrupt the game, force the player to pause, to show him how good we became at imitating Hollywood. Is that our preferred method of story telling in a game? By interrupting the game? Seriously?

No. It's the same thing: Showing off what we can not do, what we fail at, and then being proud of our shitty presentation, after having spent millions on all that not-game-crap.

I have diverged into a rant : ) There are ofc. games which provide better options. You can decide to try not to kill anybody. You can decide to sneak past the guards, or just wreak havoc and kill them all. You can try to find another route, to solve the mission more efficiently. Things like that. Those are proper options.

But all those options have to be predetermined by the designers. It's a static set of options of limited size, so the player's options to express himself, to be creative, to play the game the way he likes, are still limited. They are not true options. You can only choose your way through a predetermined graph, but you can not change this graph. So the given options are just an illusion, forcing you to actually reduce the game, instead of creatively extending it.

Imo, only options which emerge from the game's simulation are true options. And to allow this to happen, we must minimize any form of labeling and classification, if possible.

For the hole example, that's easy: In the NPC's framebuffer, AI finds the player ID simply by iterating pixels. We don't need a mental definition of ‘a hole’ at all. We only need to calculate a pose to eventually attack the player's pixels.
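A minimal sketch of that pixel search in Python, assuming the NPC view is rendered as a small ID buffer (a list of rows, each pixel holding the ID of whatever object is visible there). The buffer layout and the names `find_target_pixels` and `aim_point` are assumptions for illustration, not an existing engine API.

```python
def find_target_pixels(id_buffer, target_id):
    """Collect the (x, y) coordinates of every pixel showing target_id."""
    return [
        (x, y)
        for y, row in enumerate(id_buffer)
        for x, pixel_id in enumerate(row)
        if pixel_id == target_id
    ]

def aim_point(pixels):
    """Average the visible pixels into one screen-space aim point.

    Returns None when the target is not visible at all.
    """
    if not pixels:
        return None
    count = len(pixels)
    return (
        sum(x for x, _ in pixels) / count,
        sum(y for _, y in pixels) / count,
    )

# Hypothetical 6x3 view: 1 = wall, 7 = player seen through a small hole.
WALL, PLAYER = 1, 7
view = [
    [WALL, WALL, WALL, WALL, WALL, WALL],
    [WALL, WALL, PLAYER, PLAYER, WALL, WALL],
    [WALL, WALL, WALL, WALL, WALL, WALL],
]
visible = find_target_pixels(view, PLAYER)
target = aim_point(visible)
```

Nothing here knows what a hole is. The hole only shows up as the few pixels where the player ID is visible instead of the wall ID.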

The hard part may be to turn the short-lived opportunity to attack into a persistent objective. Even after the moving player has gone out of sight, the NPC should keep trying to reach the hole, because chances are the player can still be hit after coming closer to the hole. So we would need to track some kind of path: NPC→hole→player. The hole could be tracked under motion from the pixels showing larger distance than the wall surrounding it.

Though, such tracking won't be very stable. So i realize you're right.

The NPC could add a persistent label after detecting the opportunity once.

The label should end up at the wall nearby the hole, because only the wall provides a robust space after tracking multiple frames. We can not track the empty space of the hole itself i think.

But after that, by analyzing the pixels near the label, we can always find the hole again later on.

That's actually some good idea and progress… AI creates labels from sensing and keeps them persistent for some time.

We could connect labels, form a graph, do path finding on them, etc.

Neat. I'll add this to my vague and so far amateurish understanding of game AI… thanks! :D

Maybe the framebuffer idea could be useful for top down RTS as well.

We could generalize it this way:

That's the view from the tank. The data would be a 1D array, with a pixel on every degree. Similar to how early raycasting games like Wolfenstein generated their visuals.

It would give occlusion information. For example, if a unit is behind the house, the tank would easily calculate: ‘i can not see that’.
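To make the 1D view concrete, here is a small Python sketch. It assumes a top down world in which every obstacle is simplified to a circle (center, radius) and casts one ray per degree. The names `ray_circle_distance`, `render_view` and `can_see` are invented for this example.

```python
import math

def ray_circle_distance(origin, angle, center, radius):
    """Distance along the ray to the first hit on a circle, or None.

    Assumes the origin lies outside the circle.
    """
    dx, dy = math.cos(angle), math.sin(angle)
    ox, oy = center[0] - origin[0], center[1] - origin[1]
    along = ox * dx + oy * dy                # center projected onto the ray
    perp_sq = ox * ox + oy * oy - along * along
    if perp_sq > radius * radius:
        return None                          # ray passes beside the circle
    t = along - math.sqrt(radius * radius - perp_sq)
    return t if t > 0.0 else None

def render_view(origin, obstacles, max_range=100.0, samples=360):
    """Build the 1D depth array: nearest hit distance for each direction."""
    view = []
    for i in range(samples):
        angle = 2.0 * math.pi * i / samples
        depth = max_range
        for center, radius in obstacles:
            hit = ray_circle_distance(origin, angle, center, radius)
            if hit is not None and hit < depth:
                depth = hit
        view.append(depth)
    return view

def can_see(origin, view, target):
    """A target is visible if it is closer than the depth in its direction."""
    dx, dy = target[0] - origin[0], target[1] - origin[1]
    angle = math.atan2(dy, dx) % (2.0 * math.pi)
    index = round(angle / (2.0 * math.pi) * len(view)) % len(view)
    return math.hypot(dx, dy) <= view[index]

tank = (0.0, 0.0)
house = ((10.0, 0.0), 2.0)                   # hypothetical round "house"
tank_view = render_view(tank, [house])
```

With this setup, `can_see(tank, tank_view, (20.0, 0.0))` is false because the unit stands behind the house, while a unit at `(0.0, 20.0)` is visible. One sample per degree is coarse, so thin or distant objects can fall between rays.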

To simulate some kind of perception, we could analyze for depth discontinuities.

E.g. by making downscaled min and max mips. Where the difference between min and max is large, we have an edge, something we may want to avoid when moving, something we may want to observe if enemies come to sight, etc.
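The min/max idea could look roughly like this in Python for a circular 1D depth array. This is only a sketch under assumptions: each coarse cell covers `factor` samples plus the first sample of the next cell, so that a jump sitting exactly on a cell boundary is not missed. The overlap, the threshold value and the names `min_max_mip` and `find_edges` are my own choices.

```python
def min_max_mip(depths, factor=4):
    """Downscale a circular depth array into per-cell minima and maxima."""
    count = len(depths)
    mins, maxs = [], []
    for start in range(0, count, factor):
        # One extra sample of overlap, wrapping around the end of the array.
        block = [depths[(start + k) % count] for k in range(factor + 1)]
        mins.append(min(block))
        maxs.append(max(block))
    return mins, maxs

def find_edges(depths, factor=4, threshold=1.0):
    """Return indices of coarse cells that contain a depth discontinuity."""
    mins, maxs = min_max_mip(depths, factor)
    return [
        index
        for index, (low, high) in enumerate(zip(mins, maxs))
        if high - low > threshold
    ]

# Hypothetical 16-sample view: a box at distance 3 in front of a far wall.
sample_view = [10.0] * 8 + [3.0] * 4 + [10.0] * 4
edge_cells = find_edges(sample_view)
```

Only the cells touching the left and right silhouette of the box are reported, so later steps can spend their rays and attention there.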

By blurring the absolute difference, then following to the local maximum, we can quickly find the closest ‘interesting feature’ from every angle.

By connecting all local minima and maxima in clockwise order, we get a simplified scene representation that can be processed very quickly.

Using mips we can even have hierarchical representations for even faster processing.

We could use this to update only a small set of unique pixels per frame, after reprojecting the whole image. We know which regions are important, so we do more updates (rays) at those regions. So generating the data is not that costly.

Would such data enable some kind of better AI? For the 3D case i'm sure, for 2D not so much. But maybe.

for 2D not so much. But maybe.

Can't stop thinking about this crap… ; )

I think it would work well. Think of a simple top down twin stick shooter. You can walk with WASD and aim with mouse to shoot. There are enemies and boxes for obstacles. No grid, no path finding.

Each NPC has this 1D view. If he sees the player, he looks for the nearest cover and hides behind that, but strafes out of cover to shoot. Then gets back to cover.

He also tries to get closer to the player. So the boxes move relative to him, generating dynamic tactics of taking cover / shooting, and also generating natural curved paths.

If the player goes out of sight, a label is generated, and the NPC goes to that label in hope to see the player again. If not, label gets deleted and he starts routine of random walks.
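The label logic could be sketched as a tiny state machine. This is a hedged Python example: the `Npc` class, the state names and the `reach_radius` parameter are hypothetical, and movement, cover and shooting are left out.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]

@dataclass
class Npc:
    position: Point
    label: Optional[Point] = None   # last place the player was seen
    state: str = "wander"

def update_npc(npc: Npc, player_position: Point, player_visible: bool,
               reach_radius: float = 0.5) -> str:
    """Advance the NPC state for one frame and return the new state."""
    if player_visible:
        # Keep refreshing the label, so it holds the last seen position
        # at the moment the player goes out of sight.
        npc.label = player_position
        npc.state = "attack"
    elif npc.label is not None:
        distance = math.hypot(npc.label[0] - npc.position[0],
                              npc.label[1] - npc.position[1])
        if distance <= reach_radius:
            # Reached the label without seeing the player: give up.
            npc.label = None
            npc.state = "wander"
        else:
            npc.state = "investigate"
    else:
        npc.state = "wander"
    return npc.state
```

In a real loop, `player_visible` would come from the NPC's 1D view, and the "investigate" state would steer towards `npc.label`.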

I think this would be pretty nice, and maybe just better than a path finding approach. Little work to test. Maybe i'll add this to my ‘side quests’.

Surely has been tried before…

Just as a side point, I'm having a fun time following you, how you're laying out your ideas and how you're deriving one idea from another.

If we talk 2D games vs 3D games: IMO when we're playing games we're using the same problem solving engine regardless of whether we're dealing with 2D or 3D.

No grid, no path finding.

Each NPC has this 1D view. If he sees the player, he looks for the nearest cover and hides behind that, but strafes out of cover to shoot.

For you it's no grid, no pathfinding. An NPC imitating a behavior like yours would need some kind of structure (grid, path finding etc.) to achieve a viable result. I'm not into the FPS genre, but even an FPS has some type of waypoint based system for moving around (based on a grid or not).

Since I'm an RTS fan, my goal is to build an RTS AI that can micro-manage units like a pro RTS player would (the way a StarCraft gosu player would, for example). I have always had a secret admiration for what top end zerg players can do.

For you it's no grid, no pathfinding. An NPC imitating a behavior like yours would need some kind of structure (grid, path finding etc.) to achieve a viable result. I'm not into the FPS genre, but even an FPS has some type of waypoint based system for moving around (based on a grid or not).

Waypoints, navmesh or grid is all the same - a graph, which we use a path finding algorithm on. This lets us solve difficult problems like finding the shortest way out of a labyrinth. That's something humans can not even do. And the paths are tied to the spatial structure of the graph, so they contain corners that do not give natural trajectories. We can smooth the paths, e.g. using some funnel algorithm. And we can add avoidance of moving obstacles or dynamic objects we find near the path.
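To illustrate the "it's all just a graph" point, here is a short Python sketch of Dijkstra's algorithm on a weighted waypoint graph. The room names and edge costs are invented, and a navmesh or grid would be fed in the same way after turning polygons or cells into nodes. Path smoothing is not shown.

```python
import heapq

def shortest_path(graph, start, goal):
    """Dijkstra on a dict-of-dicts graph: graph[node][neighbor] = cost.

    Returns (total_cost, [nodes...]) or None if the goal is unreachable.
    """
    best = {start: 0.0}
    came_from = {start: None}
    frontier = [(0.0, start)]
    while frontier:
        cost, node = heapq.heappop(frontier)
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = came_from[node]
            return cost, path[::-1]
        if cost > best[node]:
            continue                      # stale queue entry
        for neighbor, weight in graph.get(node, {}).items():
            new_cost = cost + weight
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                came_from[neighbor] = node
                heapq.heappush(frontier, (new_cost, neighbor))
    return None

# Hypothetical waypoint graph with one-way edges.
waypoints = {
    "kitchen": {"hall": 2.0, "garden": 1.0},
    "hall": {"bath": 2.0},
    "garden": {"bath": 5.0},
    "bath": {},
}
route = shortest_path(waypoints, "kitchen", "bath")
```

The result is a coarse route of nodes with sharp corners at each waypoint, which is why smoothing and local avoidance have to be added on top.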

Notice, initially we have a coarse path, and we spend extra work to turn it into more natural behavior on top. The path itself does not help with behavior at all. This includes obstacle avoidance, taking cover, picking up power ups or ammo, strafing to dodge shots, flocking behavior for a unit of NPCs, etc. All this is modeled by sensing the close range environment.

Usually both methods are combined. But what would happen if we disable one or the other?

Removing path finding, in an FPS you would not even notice a difference. You lack oversight, so you can not observe the long term goals of an NPC. Like going to some nearby pub to have a drink. We would need to stalk the NPC to find out its goal.

Removing behavior, any NPC would appear clumsy and artificial. They would run into each other, walk in strange jaggy paths, ignore the player, not react when attacked, and run against the closed door as they reach the pub.

So for a FP / TP 3D game, behavior is much more important than path finding, and it's also much more difficult to get right.

For top down RTS, it's more the other way around, because long term goals of building some base at a certain location are most important. Close range behavior is mostly missing. The units don't take cover. They just stick to their path and shoot while being shot. They act pretty stupid and lifeless, but this way they do not distract the player from predicting their eventual final goal. (that's at least how i remember RTS from the 90's)

It's details like that why merging genres isn't easy.

Found some related work, illustrating some advantages of simulated vision:

So for a FP / TP 3D game, behavior is much more important than path finding

That's because in an FP game the main character doesn't do anything on its own. You are controlling everything the character is doing. If we leave the main character aside, then all the other characters don't have much behavior other than shooting at you and trying to get close. Some characters may display staged behavior, but that doesn't really count as 'behavior'*. Once you want to get past that stage, things tend to resemble how they would work in an RTS.

* Basically behavior falls into one of two branches: moving around the map and moving body parts. Moving around is true behavior; moving body parts is just predefined animations you can't call behavior (other than combat, the main character may also trade, take a health vial, and do a few other things which are visually represented as well, but these actions are limited to the main character only). So basically, other than combat, there really isn't any true behavior in an RPG/FPS. In an RTS the number of aspects involving AI controlled units that could be labeled as behavior is slightly higher. That's my (cheap) take on things.

So basically, other than combat, there really isn't any true behavior in an RPG/FPS.

Depends. I've played CP2077. Enemies behaved like tanks, bullet sponges, not even moving. Not sure if updates fixed this non-game.

But there are good examples too. Like GTA IV, which used NaturalMotion middleware. Behavior of pedestrians was very advanced. They dodge, run away in panic, nice stuff. One of the funniest moments i've ever had in games was when i hit a shop keeper by accident with some object i had thrown. His physical response was so natural, and because i did not intend to throw stuff at him, i was baffled myself, just like him, and i felt startled and even sorry.

But i can't judge RTS. After i saw a Doom clone, 2D and top down was dead to me for two decades. So i missed Starcraft or C&C. ‘Z’ on Amiga was the only RTS i've ever played. I liked it. It's a proper computer game genre.

My brother showed me Starcraft pro players on YT, but just from watching i did not understand anything.

This distinction you're making between 3D and 2D (in the sense that 3D has far more to offer for AI) isn't well grounded IMO. If you ask me, 2D is just stripped down 3D in most cases (side scrollers, Arkanoid and Space Invaders aren't included). Main genre (RTS, RPG and even FPS) 2D games offer just as much for AI as 3D ones.

In my limited view the AI and 3D make a unique blend only when simulating real world stuff on computers.

This distinction you're making between 3D and 2D (in the sense that 3D has far more to offer for AI) isn't well grounded IMO.

That's totally not what i mean. It took me decades to realize the shortcomings of 3D, and what has been sacrificed for its immersion.

In recent years i play a community driven 2D Super Mario clone with my wife in splitscreen. Actually i do not play many other games, often none for weeks.

And this old Mario game now seems the best game of all time to me. It works by predicting paths of enemies or platforms and reacting to that in time.

3D can't do that. You don't see your feet, perspective projection ruins prediction and estimating distance, so precise jump'n'run gameplay is not possible.

Mario also has some tactics and a very rich interaction model. You can pick up stuff, carry it around, stack boxes to climb up, throw shells in angles at enemies.

3D can't do tactics, because it lacks oversight. It can do rich interaction, but for some reason most devs fail to implement, and so they do it using abstractions instead. Inventory, crafting, all the GUI shit.

Mario implements all its features within its world simulation. Even to use your power up you already have, you drop it from top screen HUD, but then you must first catch it to get it.

Levels can be very dynamic. They can constrain you in time and space. Gameplay is very varied.

So i'm not praising 3D in general. It can do only one thing really well, which is immersion. But game mechanics work much better in 2D - i'm aware.

AI in a tactical sense is also more relevant in 2D, because of oversight. Oversight is the key problem of 3D. I have an idea to fix this, but it's quite extraordinary - need to try it out.

Regarding behavior, 3D is more demanding on that, because of its immersion, realism, and detail at all scales.

I would say, as an immersive 3D games dev, it's me who has to think more about Terminator robots, not you. (individual characters, how they act in detail, how realistic their motion looks, what their motion can express to the player, character centrism)

You, working on top down RTS, have to think more about Skynet. (the greater plan over groups of characters, efficient long termed strategy, weapon assignment problem, resource management, politics)

It really depends on how everybody defines the meaning of ‘AI’ for himself. So far it has always been a bad term for what we actually do.

And in the end, all genres will merge anyway.

Actually i do not play many other games, often none for weeks.

Same for me. I'm not playing many games either. I play StarCraft 2 now and then as an exercise; it prevents me from feeling too old, which is somewhat odd. Video games were my religion at one point in my life.

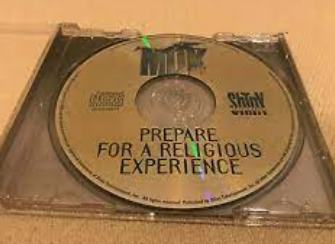

I asked a colleague at work to buy MDK for me when he is at the city, and i said ‘This game will be my new religion! Bring it to me!’

And when i opened the case, i saw this:

:D Great times, back then.

Are we too old, or are current games too bad? I never know.

I would like to play more games. But i rarely find one worth to play for more than half an hour. It would help with motivation.

Even if we are too old, then why are there no games for grown ups? We are a huge market. I'm disappointed by the industry and feel left behind.

But there is at least one good game per year still. Currently i like Elden Ring. Nice world and exploration. They really know how to reward you with a nice vista after beating the boss, and then you can walk right in…

Sure i only like it because i play with a trainer in god mode. So i ignore all the RPG features and will again not learn what the fuss about RPG is, and why people like it so much ; )

I asked a colleague at work to buy MDK

MDK? brings back (dear) memories.

I was saying I haven't been playing games in recent years, but that's not quite accurate. I did try some Facebook games, and those count as video games too.

Something similar to what you have posted

Don't worry. Carmack is still with you. While you play FB games, the AGI he develops for FB manipulates your mind with subtle background ads.

This can go as far as you're suddenly ordering a Barbie, although you never wanted one ;D

Don't worry. Carmack is still with you

there is nothing religious about version 2 I think.

Since we're talking religion, allow me to express my belief regarding faith and building robots that act like humans. I know my statement might offend these days, but I think that when you're building 100% autonomous human-like robots you need a strong grounding in (Christian) faith. Building human-like robots is pretty much playing god. You need to be a servant of strong (read: old-fashioned) values to get all the way through.

[edit] JoeJ, if you feel like keeping the discussion going, let's move to the new blog entry I made. This entry is already too cluttered with comments.

My dog isn't like that. More the opposite. It successfully predicts and also memorizes my routine paths, just to block my way all the time with remarkable precision, so that i risk stumbling over it.

However, predicting trajectory from given velocity is easy. The hard part is to sense the environment for other obstacles, to include them into the motion planning process. The easy and brute force way would be to render small framebuffers with depth for the AI agents, but both generating and processing such data is very costly. I'll stick with some raytracing to sample the environment, which will miss some details. Thus AI - just like eventually graphics or aiding player perception - dictates we should use simple and clear scenes, avoiding noise.
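The "easy" part, predicting from velocity, fits in a few lines. The following Python sketch assumes the walker keeps a constant velocity and checks whether the dog sits within some clearance of the predicted straight path. The function name `dodge_direction` and the `clearance` and `horizon` parameters are made up for illustration.

```python
import math

def dodge_direction(walker_pos, walker_vel, dog_pos,
                    clearance=0.5, horizon=3.0):
    """Return a unit vector to step aside along, or None if not in the way.

    Assumes straight-line motion at constant velocity for the walker.
    """
    vx, vy = walker_vel
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        return None                           # walker is standing still
    rx, ry = dog_pos[0] - walker_pos[0], dog_pos[1] - walker_pos[1]
    t = (rx * vx + ry * vy) / speed_sq        # time of closest approach
    if t < 0.0 or t > horizon:
        return None                           # behind the walker or too far
    # Offset of the dog from the closest point on the predicted path.
    off_x = dog_pos[0] - (walker_pos[0] + vx * t)
    off_y = dog_pos[1] - (walker_pos[1] + vy * t)
    distance = math.hypot(off_x, off_y)
    if distance >= clearance:
        return None                           # path already passes clear
    if distance > 1e-9:
        return (off_x / distance, off_y / distance)
    # Exactly on the path: step to the left of the walking direction.
    speed = math.sqrt(speed_sq)
    return (-vy / speed, vx / speed)
```

The dog moves directly away from the predicted path, which is the shortest way out. Other obstacles are ignored here, and that is exactly the hard sensing part described above.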

Not really a big problem for top down RTS on simple heightmaps, which can not even represent geometric complexity. So you're lucky.