What Happened in the Previous Part

1- Learned what a 3D scene consists of.

2- Learned how the camera works, and the concepts of projection and view matrices.

3- Learned how a scene is drawn, with a hint of what vertex and fragment shaders do for us.

4- Learned some vector math.

What Will Happen In This Part

1- Will learn what an API is.

2- Will learn what the vertex shader does for us.

3- Will learn what the fragment shader does.

4- Will learn the basics of shading and BRDF (Bidirectional Reflectance Distribution Function).

5- Will learn a simple BRDF.

APIs

An API (such as OpenGL or D3D) is an interface that lets us interact with the GPU. Modern APIs let us provide two important programs: a vertex shader and a fragment shader.

Normally the geometry is passed to the API. As said in the previous part, the geometry consists of a set of vertices. The API runs the vertex shader once for each of the geometry's vertices, and this is where the geometry is transformed and projected, as described in the previous part.

After that, the API divides the geometry into fragments/pixels. The surface of each triangle/polygon is broken up into fragments by interpolating the coordinates of its vertices in three dimensions. This is called rasterization.

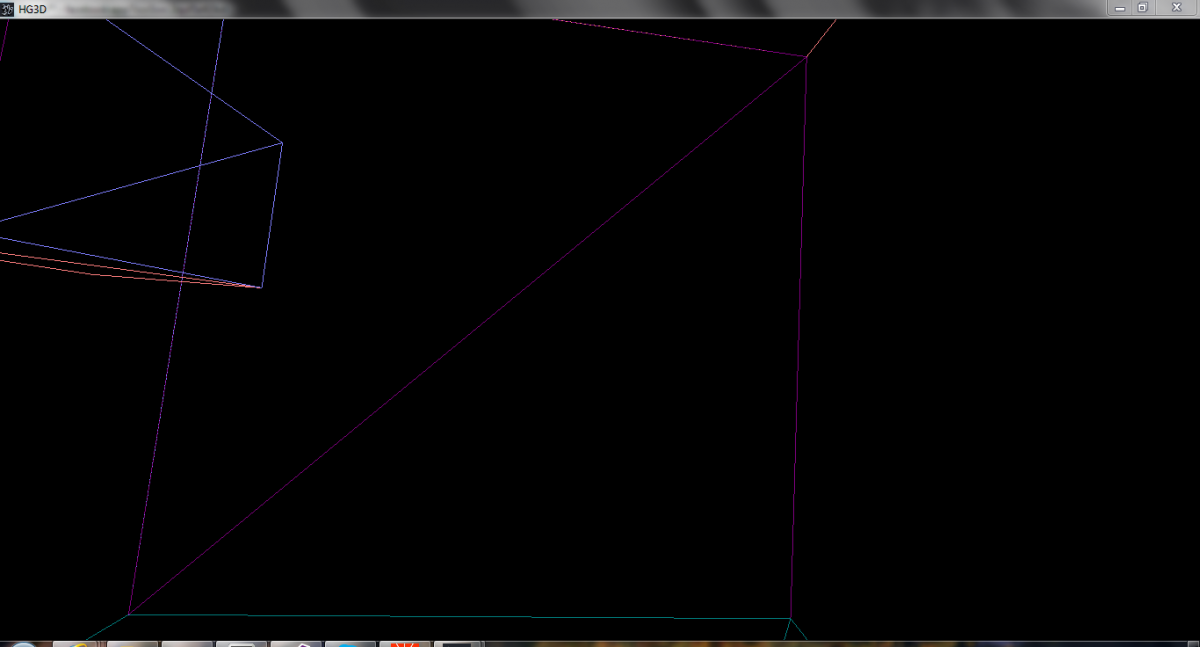

Figure 1-1: The top picture shows the geometry in wireframe view, and the other picture shows the rasterized geometry (each pixel in it is a fragment).

After rasterization, the API runs the fragment shader once for each fragment/pixel. The fragment shader decides what the color of that fragment should be and passes it back to the API, which then draws the final image.

Together, these stages are called the pipeline. There are programmable stages other than the vertex and fragment shaders (for example geometry and tessellation shaders), but they aren't important for now.
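To make the order of the stages concrete, here is a toy CPU-side sketch in C++. It is not how a real GPU or driver implements the pipeline. It makes several assumptions:

- The "vertex shader" already outputs positions in pixel coordinates.
- Only a single triangle is drawn, with no depth test.
- All names (`ToyPipeline`, `edge`, `drawTriangle`) are hypothetical.

A pixel counts as covered when its center lies inside the triangle, which is tested with edge functions. Real APIs also apply a fill rule so that a pixel on an edge shared by two triangles is drawn only once.

```cpp
#include <array>
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical minimal types for the sketch.
struct Vec2 { float x, y; };
struct Color { float r, g, b, a; };

// Edge function: twice the signed area of triangle (a, b, p).
// Its sign tells on which side of the edge a->b the point p lies.
float edge(Vec2 a, Vec2 b, Vec2 p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Toy pipeline: vertex stage, rasterization, fragment stage, in that order.
struct ToyPipeline {
    int width, height;
    std::vector<Color> framebuffer;
    int vertexInvocations = 0;
    int fragmentInvocations = 0;

    ToyPipeline(int w, int h)
        : width(w), height(h), framebuffer(w * h, Color{0, 0, 0, 1}) {}  // cleared to black

    void drawTriangle(const std::array<Vec2, 3>& vertices,
                      const std::function<Vec2(Vec2)>& vertexShader,
                      const std::function<Color()>& fragmentShader) {
        // 1) Vertex stage: the vertex shader runs once per vertex.
        std::array<Vec2, 3> v{};
        for (int i = 0; i < 3; ++i) {
            v[i] = vertexShader(vertices[i]);
            ++vertexInvocations;
        }
        // 2) Rasterization: a pixel becomes a fragment if its center is inside the triangle.
        for (int y = 0; y < height; ++y) {
            for (int x = 0; x < width; ++x) {
                Vec2 p{x + 0.5f, y + 0.5f};
                float w0 = edge(v[1], v[2], p);
                float w1 = edge(v[2], v[0], p);
                float w2 = edge(v[0], v[1], p);
                bool inside = (w0 >= 0 && w1 >= 0 && w2 >= 0) ||
                              (w0 <= 0 && w1 <= 0 && w2 <= 0);  // accept either winding order
                if (!inside) continue;
                // 3) Fragment stage: the fragment shader runs once per fragment.
                framebuffer[y * width + x] = fragmentShader();
                ++fragmentInvocations;
            }
        }
    }
};
```

For example, drawing the triangle (0,0), (4,0), (0,4) into a 4x4 framebuffer with an identity "vertex shader" and a white "fragment shader" gives these invocation counts:

- The vertex shader runs 3 times, once per vertex.
- The fragment shader runs 10 times, once per covered pixel.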

Vertex Shader

There are five types of memory that are accessible in the vertex shader:

Vertex Attributes: These are read-only variables sent from the CPU side, and they define our geometry's vertices. When the vertex shader runs for a vertex, these contain the data of that vertex. The shader cannot change them; to change them, the CPU side has to update the buffer they come from.

Uniforms: These are another kind of read-only memory, also sent from the CPU side. Unlike attributes, a uniform has the same value for every vertex in a draw call. Its value can be changed, but only from the CPU side.

Textures: These are not strictly read-only memory. Newer API versions allow writing directly to textures from inside the shaders (in OpenGL 4.2, through image load/store), and we can also draw into textures. Textures can be set and read on the CPU side and read again in the shaders.

Normal Variables: These are ordinary variables defined and used inside the shader program.

Varying Variables: These provide the interface between the vertex and fragment shaders. The "varying" keyword is no longer used (modern GLSL uses "out" in the vertex shader and "in" in the fragment shader), but I still prefer this name for them. They are set in the vertex shader and read in the fragment shader. There are several kinds, which will be discussed later.
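Here is a rough sketch of what happens to a varying between the two stages. The C++ below computes barycentric weights for a point inside a triangle and uses them to blend three per-vertex values. The names are hypothetical.

The sketch does plain screen-space linear interpolation, which corresponds to GLSL's `noperspective` qualifier. GLSL's default interpolation is perspective-correct, which also takes each vertex's w into account.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

// Twice the signed area of triangle (a, b, p).
float edge(Vec2 a, Vec2 b, Vec2 p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Interpolates a per-vertex "varying" (here an RGB color) at point p.
// The barycentric weights b0, b1, b2 sum to 1; each is 1 at its own vertex
// and 0 on the opposite edge. This is screen-space linear interpolation
// (like GLSL's `noperspective`), not the perspective-correct default.
Vec3 interpolateVarying(const std::array<Vec2, 3>& pos,
                        const std::array<Vec3, 3>& varying, Vec2 p) {
    float area = edge(pos[0], pos[1], pos[2]);
    float b0 = edge(pos[1], pos[2], p) / area;
    float b1 = edge(pos[2], pos[0], p) / area;
    float b2 = edge(pos[0], pos[1], p) / area;
    return Vec3{b0 * varying[0].x + b1 * varying[1].x + b2 * varying[2].x,
                b0 * varying[0].y + b1 * varying[1].y + b2 * varying[2].y,
                b0 * varying[0].z + b1 * varying[1].z + b2 * varying[2].z};
}
```

Suppose the three vertices carry the colors red, green, and blue:

- At a vertex, the fragment gets exactly that vertex's color.
- Halfway along the red-green edge, it gets an even mix of red and green.
- At the triangle's center, it gets one third of each color.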

Using the data sent to the vertex shader, the vertex is transformed and projected. I'm going to put some GLSL (OpenGL Shading Language) code here, since I will use OpenGL for this series, but the basic ideas are the same in other APIs.

A simple vertex shader:

```glsl
#version 420

in vec3 Vertex;                 // vertex attribute
uniform mat4 ModelMatrix;       // sent as uniform
uniform mat4 ProjectionMatrix;  // sent as uniform
uniform mat4 ViewMatrix;        // sent as uniform

void main()
{
    // Apply the model, then the view, then the projection transform.
    gl_Position = ProjectionMatrix * (ViewMatrix * (ModelMatrix * vec4(Vertex, 1.0)));
}
```

mat4: 4x4 matrix

mat3: 3x3 matrix

vec4: 4D vector

vec3: 3D vector

For projection we have a view matrix and a projection matrix. A third matrix, called the model matrix, is normally used to scale, rotate, or translate the model before projection. These matrices are sent to the shader as uniforms (check the code).

The vertex position in 3D space is sent as a 3D vector through the Vertex attribute. It is transformed by the model matrix, then the view matrix, and then the projection matrix; together these make up the MVP matrix.

To project the vertex, the API calls the main() function. Inside it, we first convert the vertex to homogeneous coordinates. Since a vertex is a point, this is done by setting the fourth component to 1:

```glsl
vec4(Vertex, 1.0)
```

Then we project this vector as described above. Finally, we pass the projected result back to the API by assigning it to gl_Position.
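The C++ sketch below shows why the three matrices can be combined into one MVP matrix, and why the fourth component is set to 1. It mirrors GLSL's column-major `mat4` with plain arrays.

- The helpers (`mul`, `translation`, `scale`) are hypothetical.
- `scale` only stands in for a projection matrix. A real perspective projection also changes w, which the API later divides by.

Matrix multiplication is associative, so two approaches give the same result:

- Multiplying the vector step by step (model, then view, then projection).
- Multiplying it once by the precomputed product of the three matrices.

This is why the MVP matrix can be computed once on the CPU and sent as a single uniform. The sketch also shows that a point (w = 1) is moved by a translation, while a direction (w = 0) is not.

```cpp
#include <array>
#include <cassert>

// Column-major 4x4 matrix like GLSL's mat4: m[column][row].
using Mat4 = std::array<std::array<float, 4>, 4>;
using Vec4 = std::array<float, 4>;

// Matrix * vector, the same operation as `M * v` in GLSL.
Vec4 mul(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int col = 0; col < 4; ++col)
        for (int row = 0; row < 4; ++row)
            r[row] += m[col][row] * v[col];
    return r;
}

// Matrix * matrix, the same operation as `A * B` in GLSL.
Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int col = 0; col < 4; ++col)
        for (int row = 0; row < 4; ++row)
            for (int k = 0; k < 4; ++k)
                r[col][row] += a[k][row] * b[col][k];
    return r;
}

// Translation matrix: the offset lives in the fourth column.
Mat4 translation(float x, float y, float z) {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    m[3][0] = x;
    m[3][1] = y;
    m[3][2] = z;
    return m;
}

// Uniform scale, used here only as a stand-in for a projection matrix.
Mat4 scale(float s) {
    Mat4 m{};
    m[0][0] = m[1][1] = m[2][2] = s;
    m[3][3] = 1.0f;
    return m;
}
```

For example, take the model matrix translation(1, 2, 3):

- The point (1, 1, 1, 1) becomes (2, 3, 4, 1).
- The direction (1, 1, 1, 0) is left unchanged.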

Fragment Shader

There are five types of memory that are accessible in the fragment shader:

Output Variables: These variables are used to return the fragment color calculated by the shader to the API.

Uniforms: Same as vertex shader.

Textures: Same as vertex shader.

Normal Variables: Same as vertex shader.

Varying Variables: Same as vertex shader.

A simple fragment shader:

```glsl
#version 420

out vec4 Output1; // the final color is set to this

void main()
{
    Output1 = vec4(1.0); // white: all four components (RGBA) set to 1
}
```

After the vertex shader has sent the projected vertex data back to the API, the API performs rasterization. It then runs the fragment shader for each fragment; like the vertex shader, this is done by the API calling the main() function. For each fragment a color is calculated and written to Output1. This fragment shader sets the color of every fragment to white, since vec4(1.0) sets the red, green, blue, and alpha components all to 1.
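What the API does with `Output1` depends on the framebuffer format. Assume a common 8-bit-per-channel normalized framebuffer: each channel is clamped to [0, 1] and scaled to the 0 to 255 range, so `vec4(1.0)` is stored as white with full alpha.

The C++ below is a hypothetical CPU-side sketch of that conversion; `fragmentShader`, `toUnorm8`, and `storeFragment` are illustrative names. It assumes round-to-nearest, although the exact rounding is specified by the API and implementation.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

struct Vec4 { float r, g, b, a; };

// CPU stand-in for the fragment shader above: every fragment is white.
Vec4 fragmentShader() { return Vec4{1.0f, 1.0f, 1.0f, 1.0f}; }

// Float channel -> 8-bit normalized value: clamp to [0, 1], scale by 255,
// then round to nearest (an assumption of this sketch).
std::uint8_t toUnorm8(float c) {
    float clamped = std::min(std::max(c, 0.0f), 1.0f);
    return static_cast<std::uint8_t>(std::lround(clamped * 255.0f));
}

// What would end up in the framebuffer for one fragment.
std::array<std::uint8_t, 4> storeFragment(const Vec4& color) {
    return {toUnorm8(color.r), toUnorm8(color.g), toUnorm8(color.b), toUnorm8(color.a)};
}
```

Clamping also means that values above 1 (or below 0) written by a shader simply saturate to 255 (or 0) in such a framebuffer.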

BRDF (Bidirectional Reflectance Distribution Function)

BRDFs are functions that describe how light changes when it hits a surface and is reflected towards the eye. These functions depend on the material of the surface.

There are three kinds of light, and each has its own BRDFs:

- Specular light is sharply reflected light with a preferred direction; the reflected light is strongest in that direction.
- Diffuse light is spread equally in every direction.
- Ambient light is present because of the many bounces of light off the surfaces of objects, and it is roughly the same all over the scene.

A Simple BRDF

I'm going to explain the diffuse BRDF in this section, since it is used in almost the same form everywhere. Let:

- $C = (R_C, G_C, B_C)$ be the color of the incoming light.
- $N$ be the unit normal, the vector perpendicular to the surface at the point.
- $L$ be the normalized direction vector from the point to the light.
- $A = (R_A, G_A, B_A)$ be the albedo of the surface (its reflection coefficient, in other words the color of the surface).
- $I = (R_I, G_I, B_I)$ be the final intensity of the light.

Then we have:

$$I = C \, A \, (L \cdot N)$$

The product of $C$ and $A$ is taken per color channel, for example $R_I = R_C \, R_A \, (L \cdot N)$, and likewise for green and blue. In practice $L \cdot N$ is clamped to zero, $\max(L \cdot N, 0)$, so that points facing away from the light receive no light instead of a negative amount.

This is called the Lambertian model.
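The Lambertian formula translates almost directly into code. Below is a C++ sketch of what a fragment shader would compute per color channel. The `Vec3`, `dot`, `normalize`, and `lambert` helpers are hypothetical stand-ins for GLSL's built-in `vec3`, `dot()`, and `normalize()`.

The sketch does two things beyond the bare formula:

- It normalizes both vectors defensively.
- It clamps $L \cdot N$ at zero, so a light behind the surface contributes nothing rather than a negative amount.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    return Vec3{v.x / len, v.y / len, v.z / len};
}

// Lambertian diffuse: I = C * A * max(dot(L, N), 0), per color channel.
//   lightColor = C, albedo = A,
//   N = surface normal, L = direction from the surface point to the light.
Vec3 lambert(Vec3 lightColor, Vec3 albedo, Vec3 N, Vec3 L) {
    float nDotL = std::max(dot(normalize(L), normalize(N)), 0.0f);
    return Vec3{lightColor.x * albedo.x * nDotL,
                lightColor.y * albedo.y * nDotL,
                lightColor.z * albedo.z * nDotL};
}
```

With white light, the result depends only on the angle between the light and the surface:

- A light straight along the normal gives the albedo itself.
- A light at 45 degrees gives about 71% of the albedo (cos 45°).
- A light behind the surface gives black.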

Thanks for reading! In the next part I will put everything we have learned since the beginning to use with the OpenGL API. Comments are welcome, so feel free to leave one.